1. W𝗵𝗮𝘁 𝗶𝘀 𝘁𝗵𝗲 𝗱𝗶𝗳𝗳𝗲𝗿𝗲𝗻𝗰𝗲 𝗯𝗲𝘁𝘄𝗲𝗲𝗻 𝘀𝘂𝗽𝗲𝗿𝘃𝗶𝘀𝗲𝗱 𝗮𝗻𝗱 an 𝘀𝘂𝗽𝗲𝗿𝘃𝗶𝘀𝗲𝗱 𝗹𝗲𝗮𝗿𝗻𝗶𝗻𝗴?

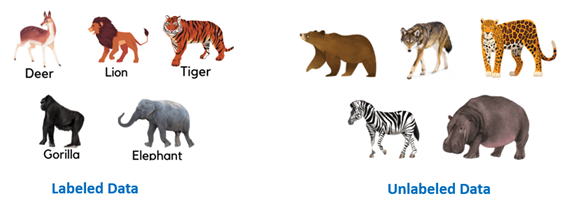

Supervised learning involves training a model on labeled data to make predictions, while unsupervised learning involves finding patterns, relationships, and structures in unlabeled data without specific target outcomes.

Labeled data refers to sets of data that are given tags or labels, and thus made more meaningful.

2.𝗪𝗵𝗮𝘁 𝗶𝘀 𝘁𝗵𝗲 𝗖𝗲𝗻𝘁𝗿𝗮𝗹 𝗟𝗶𝗺𝗶𝘁 𝗧𝗵𝗲𝗼𝗿𝗲𝗺 (CLT) 𝗮𝗻𝗱 𝘄𝗵𝘆 𝗶𝘀 𝗶𝘁 𝗶𝗺𝗽𝗼𝗿𝘁𝗮𝗻𝘁?

The Central Limit Theorem (CLT) states that the distribution of sample means approximates a normal distribution, even if the population isn’t normally distributed. It is crucial in inferential statistics as it allows us to make inferences about a population based on a sample.

A normal distribution is a symmetrical, bell-shaped distribution with no skew.

3.E𝘅𝗽𝗹𝗮𝗶𝗻 𝘁𝗵𝗲 𝗰𝗼𝗻𝗰𝗲𝗽𝘁 𝗼𝗳 𝗿𝗲𝗴𝘂𝗹𝗮𝗿𝗶𝘇𝗮𝘁𝗶𝗼𝗻 𝗶𝗻 𝗺𝗮𝗰𝗵𝗶𝗻𝗲 𝗹𝗲𝗮𝗿𝗻𝗶𝗻𝗴.

Regularization is a technique used to prevent overfitting in machine learning models. It adds a penalty term to the loss function, discouraging complex models and promoting simplicity, ultimately improving the model’s generalization performance.

4.𝗪𝗵𝗮𝘁 𝗶𝘀 𝗳𝗲𝗮𝘁𝘂𝗿𝗲 𝘀𝗲𝗹𝗲𝗰𝘁𝗶𝗼𝗻 𝗮𝗻𝗱 𝘄𝗵𝘆 𝗶𝘀 𝗶𝘁 𝗶𝗺𝗽𝗼𝗿𝘁𝗮𝗻𝘁?

Feature selection is the process of selecting the most relevant and informative features from a dataset. It helps improve model performance by reducing dimensionality, eliminating noise, and enhancing interpretability.

5.𝗪𝗵𝗮𝘁 𝗶𝘀 𝘁𝗵𝗲 𝗱𝗶𝗳𝗳𝗲𝗿𝗲𝗻𝗰𝗲 𝗯𝗲𝘁𝘄𝗲𝗲𝗻 𝗯𝗮𝗴𝗴𝗶𝗻𝗴 𝗮𝗻𝗱 𝗯𝗼𝗼𝘀𝘁𝗶𝗻𝗴?

Bagging and boosting are ensemble learning techniques. Bagging involves training multiple models independently on different subsets of the training data and aggregating their predictions, while boosting focuses on sequentially training models, giving more weight to misclassified instances to improve overall performance.

6.𝗪𝗵𝗮𝘁 𝗶𝘀 𝗰𝗿𝗼𝘀𝘀-𝘃𝗮𝗹𝗶𝗱𝗮𝘁𝗶𝗼𝗻 𝗮𝗻𝗱 𝘄𝗵𝘆 𝗶𝘀 𝗶𝘁 𝘂𝘀𝗲𝗳𝘂𝗹?

Cross-validation is a technique used to evaluate a model’s performance by partitioning the data into multiple subsets. It helps assess the model’s ability to generalize to unseen data and aids in hyperparameter tuning and model selection.

7.𝗘𝘅𝗽𝗹𝗮𝗶𝗻 𝘁𝗵𝗲 𝗯𝗶𝗮𝘀-𝘃𝗮𝗿𝗶𝗮𝗻𝗰𝗲 𝘁𝗿𝗮𝗱𝗲𝗼𝗳𝗳.

The bias-variance tradeoff refers to the balance between a model’s ability to accurately capture the true underlying patterns (low bias) and its sensitivity to fluctuations in the training data (low variance). Finding the optimal tradeoff is crucial to avoid underfitting or overfitting.

8.𝗪𝗵𝗮𝘁 𝗶𝘀 𝗔/𝗕 𝘁𝗲𝘀𝘁𝗶𝗻𝗴 𝗮𝗻𝗱 𝗵𝗼𝘄 𝗶𝘀 𝗶𝘁 𝘂𝘀𝗲𝗱 𝗶𝗻 𝗱𝗮𝘁𝗮 𝘀𝗰𝗶𝗲𝗻𝗰𝗲?

A/B testing is a statistical technique used to compare two versions of a variable (A and B) to determine which performs better. In data science, it is commonly used to evaluate the impact of changes in a product or system, helping in decision-making and optimization.

9.𝗛𝗼𝘄 𝗱𝗼 𝘆𝗼𝘂 𝗵𝗮𝗻𝗱𝗹𝗲 𝗺𝗶𝘀𝘀𝗶𝗻𝗴 𝘃𝗮𝗹𝘂𝗲𝘀 𝗶𝗻 𝗮 𝗱𝗮𝘁𝗮𝘀𝗲𝘁?

Missing values can be handled through techniques such as imputation (replacing missing values with estimated values), deletion (removing instances with missing values), or using algorithms specifically designed to handle missing data.

10.𝗪𝗵𝗮𝘁 𝗶𝘀 𝘁𝗵𝗲 𝗱𝗶𝗳𝗳𝗲𝗿𝗲𝗻𝗰𝗲 𝗯𝗲𝘁𝘄𝗲𝗲𝗻 𝗼𝘃𝗲𝗿𝗳𝗶𝘁𝘁𝗶𝗻𝗴 𝗮𝗻𝗱 𝘂𝗻𝗱𝗲𝗿𝗳𝗶𝘁𝘁𝗶𝗻𝗴?

Overfitting occurs when a model performs well on the training data but poorly on unseen data due to capturing noise or irrelevant patterns. Underfitting happens when a model is too simple and fails to capture the underlying patterns in the data.